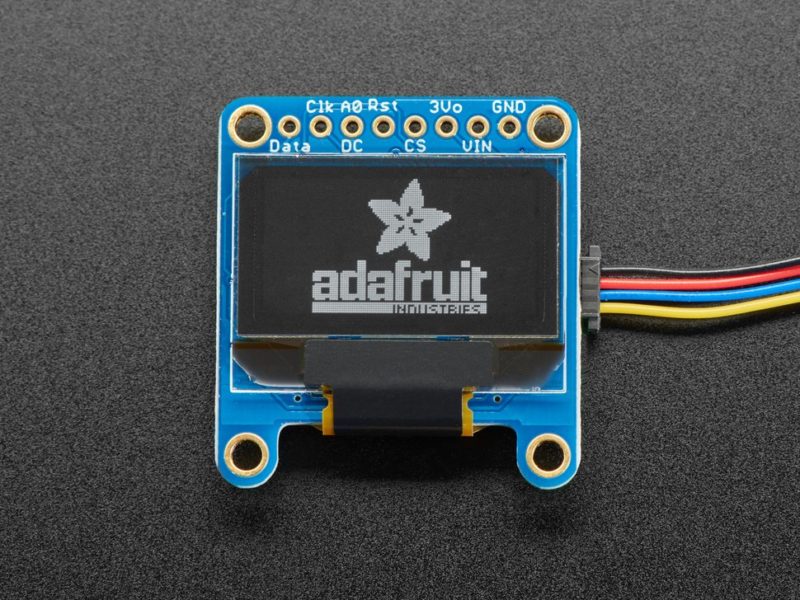

There are a variety of components that need to be emulated to fully emulate the Arduboy. I decided to start with the display, since without that I wouldn’t be able to see much of anything.

Blog

-

An Arduous Endeavor (Part 1): Background and Yak Shaving

I recently picked up a low-powered handheld gaming device which has gotten me excited about playing retro games again. Part of the fun of such devices (for me, anyway) is tweaking them and getting them set up just right, and doing that has involved exploring the various discords related to handheld gaming. Which led me to discovering a new-ish handheld system that I hadn’t heard of before: the Arduboy.

-

Deleting kafka topics from a consumer group

Kafka consumer groups let you keep track of the latest offsets consumed for a given topic/partition. We ran into an issue recently when we started monitoring the lag for a given consumer group using kafka-lag-exporter, though: if your consumer group has ever committed an offset for a given topic, it stays there as long as the consumer group exists.

We tried deleting it using the kafka-consumer-groups command line tool, but we got a message saying that the operation wasn’t supported by our broker (we are using MSK).

So what to do? Well, I started by poking around the __consumer_offsets topic, and then noped out of there when I saw that it stores keys and values in a binary format that you need a Java class to parse.

The next idea I had was to delete the consumer group and recreate it, leaving out the offending topic(s). But we subscribed to a lot of topics! Well, a little bash can go a long way.
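Since deleting a consumer group is destructive, it can help to preview the plan first. The following is a minimal dry-run sketch of the "recreate everything except the skipped topics" idea: it reads `topic offset` pairs on standard input and prints the reset commands it would issue instead of running them. The function name `plan_resets` and its argument order are made up for illustration and are not part of the script that follows.

```shell
#!/usr/bin/env bash
# Dry-run sketch (hypothetical helper): print the reset command for every
# topic that is NOT in the skip list, without touching the broker.
# Usage: plan_resets GROUP [SKIPPED_TOPIC...] < "topic offset" lines
plan_resets() {
  local group="$1"
  shift
  local skip=("$@")
  local topic offset s skipit
  while read -r topic offset; do
    # Ignore blank lines in the input.
    [[ -z "$topic" ]] && continue
    skipit=0
    for s in "${skip[@]}"; do
      [[ "$s" == "$topic" ]] && skipit=1
    done
    (( skipit )) && continue
    echo "kafka-consumer-groups.sh --group $group --reset-offsets --topic $topic --to-offset $offset --execute"
  done
}
```

For example, `printf 'topic-a 42\nold-unused-topic1 7\n' | plan_resets your-consumer-group old-unused-topic1` prints a single reset command for `topic-a` and nothing for the skipped topic.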

    #!/usr/bin/env bash

    set -o errexit
    set -o nounset

    export kafka_server=your-kafka-server:9092
    export consumer_group=your-consumer-group
    export skiptopics=("old-unused-topic1" "old-unused-topic2")

    containsElement () {
      local e match="$1"
      shift
      for e; do [[ "$e" == "$match" ]] && return 0; done
      return 1
    }

    current_offsets=$(docker run --rm --volume ~/kafka-ssl.properties:/config.properties --entrypoint bin/kafka-consumer-groups.sh solsson/kafka --bootstrap-server $kafka_server --group $consumer_group --describe | tail -n +3 | awk '{ print $2, $4}')

    echo "Current offsets:"
    echo "$current_offsets"

    ( set -o xtrace; docker run --rm --volume ~/kafka-ssl.properties:/config.properties --entrypoint bin/kafka-consumer-groups.sh solsson/kafka --bootstrap-server $kafka_server --group $consumer_group --delete )

    while IFS= read -r line; do
      arr=($line)
      topic="${arr[0]}"
      if containsElement "$topic" "${skiptopics[@]}"; then
        continue
      fi
      offset="${arr[1]}"
      ( set -o xtrace; docker run --rm --volume ~/kafka-ssl.properties:/config.properties --entrypoint bin/kafka-consumer-groups.sh solsson/kafka --bootstrap-server $kafka_server --group $consumer_group --reset-offsets --topic $topic --to-offset $offset --execute )
    done < <(printf '%s\n' "$current_offsets")

-

Running a local Docker registry mirror

We recently moved to a mountainside, and while we are theoretically within the service area of our local cable internet provider, we have been waiting for them to get back to us about installation for over two weeks.

During that time, I’ve been doing my development work using the LTE on my phone (thanks unlimited data from Visible!). However, LTE isn’t really the fastest thing in the world, especially when you’re trying to pull a bunch of docker images as you’re testing multiple local kind clusters.

While I was waiting for another cluster to spin up, I decided to look into running a local Docker mirror. Docker publishes a recipe for this, but calling it a recipe feels a little misleading – there’s no code to copy and paste!

So here’s my recipe:

- Get a registry container running:

$ docker run --detach --env "REGISTRY_PROXY_REMOTEURL=https://registry-1.docker.io" --publish=5000:5000 --restart=always --volume "$HOME/docker-registry:/var/lib/registry" --name registry registry:2

- Update your docker daemon JSON configuration to include the following:

{ "registry-mirrors": ["http://localhost:5000"] }
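To confirm the registry container is actually answering before relying on it, a quick check like the following may help. It is only a sketch: `registry_is_up` is a hypothetical helper name, it assumes `curl` is installed, and it defaults to the `http://localhost:5000` address used above. It requests `/v2/`, the base endpoint of the registry HTTP API, which a running registry answers successfully.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): succeed if a registry answers at the given URL.
# Assumes curl is available; the default URL matches the mirror configured above.
registry_is_up() {
  local url="${1:-http://localhost:5000}"
  # --fail turns HTTP error statuses into a non-zero exit code;
  # --max-time keeps the check from hanging on a dead address.
  curl --fail --silent --show-error --max-time 5 "$url/v2/" > /dev/null
}
```

Running `registry_is_up && echo "mirror is up"` after starting the container should print the message. If the mirror is being used, the `$HOME/docker-registry` directory from the volume mount should also start to grow after the next `docker pull`.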

-

-

Automatically run a task every two weeks without cron

I use fish as my go-to shell – it’s fast, the syntax is more sane than bash, and there are a lot of great plugins for it.

One plugin I use a lot is jethrokuan’s port of z, which allows for very quick directory jumping.

Unfortunately, sometimes I reorganize my directories, and z can get confused and try to jump into a no longer existent directory.

No worries! z thought of that, and provides the z --clean command which removes nonexistent directories from its lists.

But I never remember to run that. Wouldn’t it be nice if I could just have that run automatically every two weeks or so?
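The rest of the post is not shown in this excerpt, so the following is not necessarily the approach it takes. As one possible sketch of the general idea, written in bash rather than fish, a shell startup file could call a helper that runs a command only when a stamp file says enough time has passed. The name `run_if_stale`, the stamp file location, and the interval are all assumptions made for illustration.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): run a command at most once per interval,
# tracking the last successful run in a stamp file instead of using cron.
# Usage: run_if_stale STAMP_FILE INTERVAL_SECONDS COMMAND [ARGS...]
run_if_stale() {
  local stamp="$1" interval_secs="$2"
  shift 2
  local now last=0
  now=$(date +%s)
  # The stamp file holds the epoch time of the last successful run.
  [[ -f "$stamp" ]] && last=$(<"$stamp")
  if (( now - last >= interval_secs )); then
    # Only record the run if the command succeeded, so failures are retried.
    "$@" && printf '%s\n' "$now" > "$stamp"
  fi
}
```

A call such as `run_if_stale "$HOME/.z-clean-stamp" $((14 * 24 * 60 * 60)) fish -c 'z --clean'` in a startup file would then run the cleanup roughly every two weeks, whenever a new shell happens to start after the interval has elapsed.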

-

Bring back “Always open these types of links in the associated app” to Google Chrome

I use Zoom for my work a lot. I pass around Zoom links like they’re popcorn being shared at a movie theater. I’ve got them in my calendar, in Slack, and in emails.

I used to be able to click on a link, and the link would open in my default web browser (Google Chrome), and then that would open up the Zoom application.

In Google Chrome 77, Google changed that. Now, I have one more button to click to confirm that I want to open up the Zoom application. There used to be a checkbox labeled Always open these types of links in the associated app, but that went away.

However, there is a hidden preference (intended for policy administration, but usable by all) that can bring it back! Windows users can add a registry entry, but I’m on a Mac. Here’s how a Mac user can do it:

- Quit Google Chrome

- Open up Terminal

- Run the following code at the terminal prompt:

defaults write com.google.Chrome ExternalProtocolDialogShowAlwaysOpenCheckbox -bool true

- Restart Google Chrome

Now, when you try to open links, that checkbox will be back. Check it, and you’ll have fewer buttons to click in the future!